If Not Smarter then Smaller!

I've long maintained a list of "things you only buy once in life." On that list are items like a pool, a boat, a ferret, a hot tub and an RV. We call them RVs (recreational vehicles); others call them motorhomes. While the story of how we acquired one is interesting, it's not the topic of this post. This post is another "remind me how I did that?" post.

This post is about doors. Making doors. Making frame and panel doors. Making cope and stick doors. I had to make another door this weekend, and because I do it so infrequently, I forget the steps involved.

First, a little context on how door making relates to the motorhome. On our vehicle, the original bathroom door was a three-panel, tambour-style door. Tambour doors are the kind you'd find on an old roll-top desk. The tambour doors that work well are made of dozens of small, thin strips of wood. Our RV door, as mentioned, had only three panels and didn't work well at all.

I had made some repairs but finally reached the conclusion that diminishing returns had long since set in and it was simply time to replace it with a real door. A door door. A door door with a handle and a hinge. A door door that swings.

Without further ado, I'm writing down the steps I take to make a door.

Step 0 - The Router Bits

Frame and panel router bit sets make the work much easier. I currently own two bit sets: the Shaker Bit Set from Infinity Tools and the Katana Matched Rail and Stile Bit Set (Ogee) from MLCS Woodworking.

The Katana bits were the closest match to the profile of the existing cabinets in the RV so they got the nod.

These bits are also called "cope and stick" bits by some other woodworkers. One bit, called the stick bit, makes the stick cuts. The other bit makes the cope cut. This other bit is called - wait for it - the cope bit.

Remembering which one is which isn't easy for me.

Step 1 - The Spreadsheet

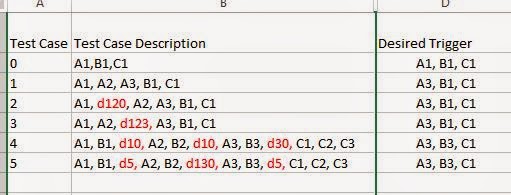

The math behind a door is detailed enough that it's worth using a computer to figure things out. There are programs out there dedicated to door making, but the math isn't complicated enough to warrant spending the money. It can be done in a spreadsheet. I made one, and I keep it on Google Docs.

Rail and Stile Calculations Sheet

Here's the output of the spreadsheet for this project. The finished door will be 22 1/4" wide by 73" tall. It'll be a two-panel door. The rails and stiles of the existing cabinets are 2 1/8" wide, so we'll use the same width for the door.

The bottom rail will be 4" tall to give the door some visual weight.

For the math to all work out, it's important to know the depth of the panel groove that'll be cut by the bit. For my Katana Ogee bit set, it's a 3/16" deep groove. It'll change from bit set to bit set.

And it affects the size of the stock you cut, so be sure to understand the depth of the groove cut by your bit set.
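The core of that spreadsheet is a few lines of arithmetic. Below is a minimal Python sketch of the rail-length part, not the spreadsheet itself. It assumes the rails sit between full-height stiles and that the tenon left by the cope cut is as long as the groove is deep; `rail_length` and `to_inches` are hypothetical helper names. Python's `fractions.Fraction` keeps the inch fractions exact, so there's no floating-point rounding.

```python
from fractions import Fraction as F

# Project dimensions from this post, in inches.
DOOR_WIDTH = F(89, 4)   # 22 1/4"
STILE_WIDTH = F(17, 8)  # 2 1/8"


def rail_length(door_width, stile_width, tenon_length):
    """Rail length = space between the stiles + a stub tenon on each end.

    Assumption: the coped tenon is as long as the groove is deep.
    """
    return door_width - 2 * stile_width + 2 * tenon_length


def to_inches(x):
    """Format a Fraction as a mixed number, e.g. Fraction(147, 8) -> 18 3/8"."""
    whole, rem = divmod(x.numerator, x.denominator)
    if rem == 0:
        return f'{whole}"'
    if whole == 0:
        return f'{rem}/{x.denominator}"'
    return f'{whole} {rem}/{x.denominator}"'


# The groove depth changes from bit set to bit set, and so does the rail length.
for groove in (F(3, 16), F(3, 8)):
    length = rail_length(DOOR_WIDTH, STILE_WIDTH, groove)
    print(f"groove {to_inches(groove)}: rail length {to_inches(length)}")
# groove 3/16": rail length 18 3/8"
# groove 3/8": rail length 18 3/4"
```

Under these assumptions, a 3/16" groove gives an 18 3/8" rail, while the 18 3/4" rail length used in Step 4 corresponds to 3/8" per end. A real spreadsheet may use a different tenon length or convention, so check a cope cut on scrap before trusting either number.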

Step 2 - Stock Prep

Not much to say here that you don't already know. Mill your stock (joint, plane) to 3/4" thickness. Rough cut the rails and stiles long, since the router bit can get a bit wonky on the ends; it's easier to trim off the wonkiness AFTER the rails and stiles are routed. Rip your rails and stiles to the finished width (2 1/8" for the stiles and the top and middle rails, 4" for the bottom rail).

Note that I also have plenty of scrap pieces left over. They'll come in handy for test cuts and setup.
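The stock prep above boils down to a cut list. Here's a small sketch that rough-cuts each part long by a fixed allowance. The 1" allowance is a hypothetical number (the post doesn't give one), the stiles are assumed to run the full 73" door height, and the 18 3/4" rail length comes from Step 4.

```python
from fractions import Fraction as F

# Hypothetical allowance: extra length so the wonky ends left by the
# router bit can be trimmed off after routing.
ROUGH_ALLOWANCE = F(1)

# (part, quantity, rip width, finished length) in inches for this door.
PARTS = [
    ("stile", 2, F(17, 8), F(73)),
    ("top rail", 1, F(17, 8), F(75, 4)),
    ("middle rail", 1, F(17, 8), F(75, 4)),
    ("bottom rail", 1, F(4), F(75, 4)),
]


def cut_list(parts, allowance):
    """Return (part, qty, rip width, rough length, finished length) rows."""
    return [(name, qty, width, length + allowance, length)
            for name, qty, width, length in parts]


for name, qty, width, rough, final in cut_list(PARTS, ROUGH_ALLOWANCE):
    print(f'{qty} x {name}: rip {float(width):g}", '
          f'rough {float(rough):g}", final {float(final):g}"')
```

Keeping the finished length next to the rough length makes the later trim step (Step 4) a straight read off the list.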

Step 3 - Stick It

Make the stick cuts first; the cope cuts are last. Mount the stick bit in the router table. This is the bit with the bearing on the top. If you're lucky enough to have made, or bought, a setup block, use it to set the height of the bit. Bring the router fence in and make the fence and the bearing flush.

Using scrap, make some test cuts. When satisfied, make all of the stick cuts on the rails and stiles. Remember, if you care, that the face side goes DOWN on the router table.

Step 4 - Cut Rails to Exact...

Cut them to the finished width (or length, as I realize I'm overloading the use of "width"). Before you cope the ends, the rails must be cut down to their final widths. In my case that was 18 3/4", and I use my Incra Miter 1000 sled to ensure all rails are cut to the same dimension.

Step 5 - Cope Cut Rail Ends

I use a home-made sled when coping the rail ends. The sled is 1/2" hardboard with a strip of scrap set at 90° as the fence and more scrap as the backer material to prevent blowouts.

Mount the cope bit (the bit with the bearing in the middle). Set the height, remembering to account for the thickness of the sled. As before, bring the router fence in and make the fence and the bearing flush.

Make a test cut on some scrap and assemble the rail and stile -- face up. If the rail is proud of (higher than) the stile, lower the bit and retest.

Carefully make the cope cuts. Keep the stock square and tight to the fence.

Go slow. Use a piece of scrap as a backer.

Step 6 - Check the Fit

Step 7 - Make Your Panels

I had some leftover Quarter Sawn White Oak (QSWO) veneer, so I used that for the panels. While a J-Roller works, a vacuum press will save you some sweat and shoulder pain.

This veneer was also "PSA enabled" (peel and stick), which means an adhesive had already been applied to the backing. When using other veneer, I've used Plastic Resin glue with great results.

[ No, I'm not veneering the back sides. Sue me. ;) ]

Step 8 - Trim Panels

I recommend double checking everything - trim some scrap and test fit before ripping your panels to width. Once the width is OK, cross cut the panels to final length.

And test the fit. In my case, the veneer added enough thickness to the panel to make the fit a bit too tight.

So a dado blade and a thin cut on the backside removed a few thousandths of material to make the fit 'just right'.

You want the panel to slide into the groove with no binding, but still have a tight, rattle-free fit.
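The panel size follows from the same frame dimensions: each panel fills the opening between the stiles and rails, plus one groove depth on every side. A minimal sketch, assuming two panels stacked vertically and separated by the middle rail, the 3/16" groove from Step 1, and a hypothetical `clearance` parameter. The post doesn't say how much gap it left, so the zero default is the size that bottoms out in the grooves.

```python
from fractions import Fraction as F

# Project dimensions from this post, in inches.
DOOR_WIDTH = F(89, 4)                      # 22 1/4"
DOOR_HEIGHT = F(73)                        # 73"
STILE_WIDTH = F(17, 8)                     # 2 1/8"
RAIL_WIDTHS = [F(17, 8), F(17, 8), F(4)]   # top, middle, bottom rails
GROOVE_DEPTH = F(3, 16)                    # Katana Ogee set


def panel_size(door_width, door_height, stile_width, rail_widths,
               groove_depth, clearance=F(0)):
    """Rip width and crosscut length for each panel.

    Assumes every rail sits between full-height stiles, giving one panel per
    gap between rails, and each panel reaches groove_depth into the frame on
    all four sides. `clearance` (hypothetical) is subtracted from each
    dimension.
    """
    panel_count = len(rail_widths) - 1
    width = door_width - 2 * stile_width + 2 * groove_depth - clearance
    open_height = door_height - sum(rail_widths)
    length = open_height / panel_count + 2 * groove_depth - clearance
    return width, length


w, l = panel_size(DOOR_WIDTH, DOOR_HEIGHT, STILE_WIDTH, RAIL_WIDTHS, GROOVE_DEPTH)
print(f'rip to {float(w):g}", crosscut to {float(l):g}"')
# rip to 18.375", crosscut to 32.75"
```

Ripping to width first and crosscutting second matches the order above; test the fit on scrap at each step, since veneer thickness can still make a "correct" panel too tight.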

Step 9 - Test Assemble

Check everything. Don't glue yet - finishing is much easier before the door is assembled.

Step 10 - Finish

When you first get into woodworking, you'll hear others grumble about how they hate finishing or detest sanding. It's true. Finishing sucks. And, it's as much work as the wood working (woodworking work?) part.

Matching colors is usually difficult. I've learned two things: one, the stain colors on the cans won't come close to the color you'll get in the end, and two, keep the lights dim and you won't notice.

Lowe's was close by and had a new product on the shelf that advertised a faster drying time, so I gave it a shot. I went with a quart of Rust-Oleum's Ultimate Wood Stain in the Wheat color. Interestingly enough, I don't see that shade, Wheat, on any website.

In any event, the panels, rails and stiles were stained. Once dry, they got two coats of General Finishes Arm-R-Seal Gloss, with a light 400-grit polish between coats to knock off the nibs.

Two final coats of General Finishes Arm-R-Seal Satin knock the shine down, and we're ready to assemble.

Step 11 - Assemble

Glue, clamp and square. Voilà. Finito.

Since I expect the door to get some abuse, 23-gauge pins are put through the tenons.